Causality

For standard probabilistic queries, it does not matter whether our model is causal or not.

It matters only that it encodes the “right” distribution. The difference between causal and probabilistic models arises when we care about interventions in the model: situations where we do not simply observe the values that variables take but can take actions that manipulate these values.

Thus, our goal is to isolate the specific issue of understanding causal relationships between variables.

One approach to modeling causal relationships uses the notion of ideal interventions: interventions of the form do(Z := z), which force the variable Z to take the value z and have no other immediate effect.
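To make do(Z := z) concrete, here is a minimal Python sketch. It assumes a structural model in which every variable is computed by its own mechanism from earlier variables plus random noise; this functional representation, the Rain/WetGrass example, and its probabilities are hypothetical. The intervention replaces the forced variable's mechanism by the constant z and leaves every other mechanism unchanged.

```python
import random
from typing import Callable, Dict, List, Optional

# A mechanism computes one variable from the values sampled so far plus noise.
Mechanism = Callable[[Dict[str, int], random.Random], int]

# Hypothetical two-variable model (Rain -> WetGrass); numbers are made up.
MECHANISMS: Dict[str, Mechanism] = {
    "Rain": lambda v, rng: int(rng.random() < 0.3),
    "WetGrass": lambda v, rng: int(rng.random() < (0.9 if v["Rain"] else 0.1)),
}
ORDER: List[str] = ["Rain", "WetGrass"]  # topological order


def sample(rng: random.Random, do: Optional[Dict[str, int]] = None) -> Dict[str, int]:
    """Draw one joint sample; each variable in `do` is forced to its value."""
    do = do or {}
    values: Dict[str, int] = {}
    for name in ORDER:
        if name in do:
            values[name] = do[name]  # do(Z := z): bypass Z's own mechanism
        else:
            values[name] = MECHANISMS[name](values, rng)
    return values


def estimate(var: str, do: Optional[Dict[str, int]] = None,
             n: int = 50_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(var = 1) under the (optional) intervention."""
    rng = random.Random(seed)
    return sum(sample(rng, do)[var] for _ in range(n)) / n


print(estimate("WetGrass", do={"Rain": 1}))  # close to 0.9
print(estimate("Rain", do={"WetGrass": 1}))  # close to 0.3: the cause is untouched
```

Forcing WetGrass leaves Rain at its prior probability, which is the "no other immediate effect" part of the definition. Merely observing WetGrass = 1 would instead raise the probability of Rain (to about 0.79 with these numbers).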

Intervention Queries

An intervention query corresponds to a scenario in which we force the variables in Z to take the value z, observe the values x for the variables in X, and wish to find the distribution over the variables Y.
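Written out, such a query asks for the left-hand quantity below. It is answered by ordinary conditioning in the mutilated network $B_{Z=z}$ defined under Causal Models, and it generally differs from conditioning on Z = z in the original network B (the side-by-side comparison is an illustration added here):

```latex
P\bigl(Y \mid do(Z := z),\, X = x\bigr)
\;=\; P_{B_{Z=z}}\bigl(Y \mid X = x\bigr)
\;\neq\; P_{B}\bigl(Y \mid Z = z,\, X = x\bigr) \quad \text{(in general)}
```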

Correlation and Causation

A correlation between two variables X and Y can arise in multiple settings:

○ when X causes Y,

○ when Y causes X, or

○ when X and Y are both effects of a single cause.
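To see why an observed correlation alone cannot settle which case holds, the sketch below works out three hypothetical binary models, one per setting in the list, with made-up parameters. The first two produce exactly the same joint distribution over X and Y (P(X=1, Y=1) = P(X=0, Y=0) = 0.45), yet they disagree about what happens under do(X := 1).

```python
# Three hypothetical binary models; all parameters are invented for illustration.

def model_x_causes_y():
    """X -> Y with P(X=1) = 0.5."""
    p_y1_given_x = {0: 0.1, 1: 0.9}
    observed = p_y1_given_x[1]     # P(Y=1 | X=1)
    intervened = p_y1_given_x[1]   # P(Y=1 | do(X:=1)): Y still responds to X
    return observed, intervened


def model_y_causes_x():
    """Y -> X with P(Y=1) = 0.5."""
    p_y1 = 0.5
    p_x1_given_y = {0: 0.1, 1: 0.9}
    # Bayes' rule: P(Y=1 | X=1) = P(X=1 | Y=1) P(Y=1) / P(X=1)
    p_x1 = p_x1_given_y[1] * p_y1 + p_x1_given_y[0] * (1 - p_y1)
    observed = p_x1_given_y[1] * p_y1 / p_x1
    intervened = p_y1              # forcing X cannot change its cause Y
    return observed, intervened


def model_common_cause():
    """X <- W -> Y with P(W=1) = 0.5 and no edge between X and Y."""
    p_w = {0: 0.5, 1: 0.5}
    p_x1_given_w = {0: 0.1, 1: 0.9}
    p_y1_given_w = {0: 0.1, 1: 0.9}
    p_x1_y1 = sum(p_w[w] * p_x1_given_w[w] * p_y1_given_w[w] for w in (0, 1))
    p_x1 = sum(p_w[w] * p_x1_given_w[w] for w in (0, 1))
    observed = p_x1_y1 / p_x1
    # do(X:=1) leaves W at its prior, so Y keeps its marginal distribution.
    intervened = sum(p_w[w] * p_y1_given_w[w] for w in (0, 1))
    return observed, intervened


for name, model in [("X -> Y", model_x_causes_y),
                    ("Y -> X", model_y_causes_x),
                    ("X <- W -> Y", model_common_cause)]:
    observed, intervened = model()
    print(f"{name:12} P(Y=1|X=1)={observed:.2f}  P(Y=1|do(X:=1))={intervened:.2f}")
```

Running it prints 0.90/0.90, 0.90/0.50 and 0.82/0.50 for the three models: the correlation is present in all of them, but only in the first does forcing X actually change Y.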

If two variables X and Y are correlated in some observed distribution, what can we infer about the causal relationship between them?

In practice, however, there is a huge set of possible latent variables, representing factors that exist in the world but that we cannot observe and often are not even aware of.

A latent variable may induce correlations between the observed variables that do not correspond to causal relations between them, and hence forms a confounding factor in our goal of determining causal interactions.

In many cases, correlations may also arise for non-causal reasons.

The correlation between a pair of variables X and Y may be a consequence of multiple mechanisms, some causal and others not.

To answer a causal query regarding an intervention at X, we need to disentangle these different mechanisms, and to isolate the component of the correlation that is due to the causal effect of X on Y.
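As a concrete illustration of this disentangling, the sketch below assumes a hypothetical binary network with a confounder W (W → X, W → Y) and a direct causal edge X → Y; all probabilities are made up. The observational difference P(Y=1 | X=1) − P(Y=1 | X=0) mixes both mechanisms, while the interventional difference, computed by cutting the edge W → X so that W keeps its prior, isolates the causal component.

```python
# Hypothetical parameters for W -> X, W -> Y, X -> Y (all binary).
P_W1 = 0.5
P_X1_GIVEN_W = {0: 0.2, 1: 0.8}


def p_w(w):
    return P_W1 if w == 1 else 1 - P_W1


def p_x(x, w):
    return P_X1_GIVEN_W[w] if x == 1 else 1 - P_X1_GIVEN_W[w]


def p_y1(x, w):
    """P(Y=1 | X=x, W=w): X adds 0.2 (causal path), W adds 0.5 (confounding path)."""
    return 0.1 + 0.2 * x + 0.5 * w


def observational(x):
    """P(Y=1 | X=x): condition on X in the original network."""
    num = sum(p_w(w) * p_x(x, w) * p_y1(x, w) for w in (0, 1))
    den = sum(p_w(w) * p_x(x, w) for w in (0, 1))
    return num / den


def interventional(x):
    """P(Y=1 | do(X:=x)): the edge W -> X is cut, so W keeps its prior P(W)."""
    return sum(p_w(w) * p_y1(x, w) for w in (0, 1))


total = observational(1) - observational(0)
causal = interventional(1) - interventional(0)
print(f"observed difference: {total:.2f}")           # 0.50
print(f"causal component:    {causal:.2f}")          # 0.20
print(f"remainder:           {total - causal:.2f}")  # 0.30
```

Here the remainder of 0.30 is simply what is left after subtracting the causal difference from the observed one; it is the part of the correlation carried by the confounder W in this particular model.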

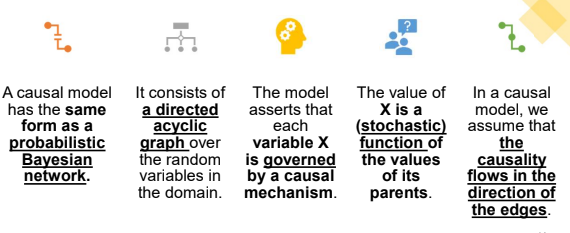

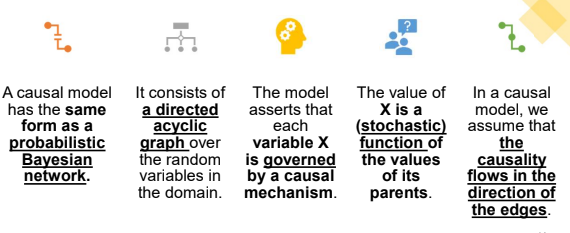

Causal Models

In the mutilated network $B_{Z=z}$, we eliminate all incoming edges into each variable $Z_i \in Z$ and set its value to be $z_i$ with probability 1.

Based on this construction, we can now define a causal model as a model that answers intervention queries using the appropriate mutilated network.
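A minimal Python sketch of this definition, assuming binary variables and a hypothetical dictionary representation of the network (each variable maps to its parent list and a table of P(variable = 1 | parents)). `mutilate` builds the mutilated network as described above: each intervened variable loses its parents and gets a point-mass CPD. `query` is plain brute-force enumeration, which is only practical for tiny networks.

```python
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical representation: variable -> (parents, CPD), where the CPD maps a
# tuple of parent values to P(variable = 1 | parents). All variables are binary.
Network = Dict[str, Tuple[List[str], Dict[Tuple[int, ...], float]]]


def mutilate(net: Network, do: Dict[str, int]) -> Network:
    """Build B_{Z=z}: remove the incoming edges of each Z_i and clamp it to z_i."""
    mutilated = dict(net)  # shallow copy; the original network is left unchanged
    for var, value in do.items():
        mutilated[var] = ([], {(): float(value)})  # no parents, P(Z_i = z_i) = 1
    return mutilated


def query(net: Network, target: str, evidence: Dict[str, int]) -> float:
    """P(target = 1 | evidence) by brute-force enumeration of the joint."""
    names = list(net)
    num = den = 0.0
    for values in product((0, 1), repeat=len(names)):
        assignment = dict(zip(names, values))
        if any(assignment[k] != v for k, v in evidence.items()):
            continue  # inconsistent with the observed evidence
        p = 1.0
        for var, (parents, cpd) in net.items():
            p1 = cpd[tuple(assignment[q] for q in parents)]
            p *= p1 if assignment[var] == 1 else 1.0 - p1
        num += p * assignment[target]
        den += p
    return num / den


# Made-up example network: W -> X, W -> Y, X -> Y.
NET: Network = {
    "W": ([], {(): 0.3}),
    "X": (["W"], {(0,): 0.1, (1,): 0.7}),
    "Y": (["X", "W"], {(0, 0): 0.2, (0, 1): 0.5, (1, 0): 0.6, (1, 1): 0.9}),
}

print(query(NET, "Y", {"X": 1}))                # conditioning: ≈ 0.825
print(query(mutilate(NET, {"X": 1}), "Y", {}))  # intervention: ≈ 0.69
print(query(mutilate(NET, {"X": 1}), "W", {}))  # W keeps its prior: ≈ 0.3
```

Conditioning on X = 1 raises the belief in W (to 0.75), which inflates P(Y = 1); in the mutilated network W stays at its prior 0.3, so the intervention answer is lower.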